The joint-probability of agreement is probably the simplest and least robust measure. It counts the number of times the raters assign the same rating (e.g. 5) to an item and divides this number by the total number of items rated. This, however, assumes that the data are entirely nominal. Another problem with this statistic is that it does not take into account that agreement may happen solely by chance.

Kappa statistics

Cohen's kappa, which works for two raters, and Fleiss' kappa, an adaptation that works for any fixed number of raters, are statistics that also take into account the amount of agreement that could be expected to occur through chance. They suffer from the same problem as the joint-probability of agreement in that they treat the data as nominal and assume no underlying connection between the scores.
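To make the difference between raw agreement and chance-corrected agreement concrete, here is a minimal Python sketch for the two-rater case. The function names and the sample ratings are hypothetical and chosen only for illustration; the chance term uses the standard Cohen's kappa definition, in which each rater is assumed to rate independently according to their own category frequencies.

```python
from collections import Counter


def joint_probability_of_agreement(rater_a, rater_b):
    """Fraction of items on which two raters give the same rating."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(rater_a)
    p_observed = joint_probability_of_agreement(rater_a, rater_b)
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    # Chance agreement: probability that both raters pick the same category
    # if each rated independently with their own marginal frequencies.
    p_chance = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_chance == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical ratings on a 1-5 scale for ten items.
a = [1, 2, 3, 3, 2, 1, 4, 1, 2, 5]
b = [1, 2, 3, 3, 2, 2, 4, 1, 2, 4]

print(joint_probability_of_agreement(a, b))  # 0.8
print(round(cohens_kappa(a, b), 3))          # 0.737
```

In this made-up example the raters agree on 8 of 10 items, but 0.24 of that agreement would be expected by chance alone, so kappa comes out lower than the raw agreement. Both functions compare ratings only for equality, which is exactly the nominal treatment described above: a disagreement of 4 versus 5 counts the same as 1 versus 5.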

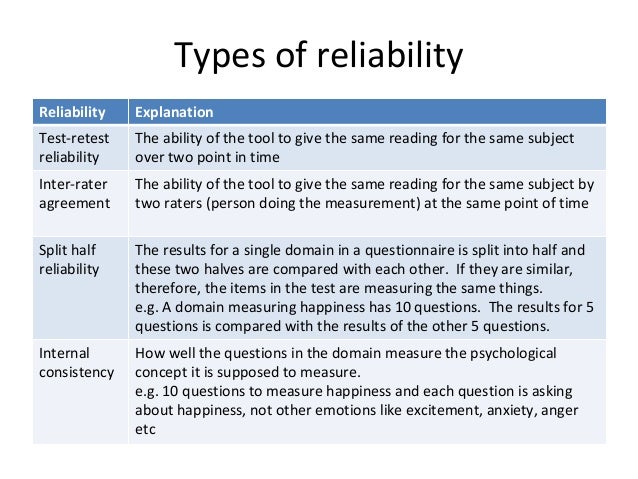

Inter-rater reliability, or inter-rater agreement, is the measurement of agreement between raters. It gives a score of how much homogeneity, or consensus, there is in the ratings given by judges. It is useful in refining the metrics given to human judges, for example by determining whether a particular scale is appropriate for measuring a particular variable. A number of statistics can be used to determine inter-rater reliability, and different statistics are appropriate for different types of measurement. Among them are the joint-probability of agreement, Cohen's kappa and the related Fleiss' kappa, inter-rater correlation, and intra-class correlation.
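As a rough illustration of why the type of measurement matters, the Python sketch below compares exact agreement with a plain Pearson correlation between two raters. The helper names and the ratings are hypothetical, and Pearson's coefficient is used here only as one simple example of an inter-rater correlation; it is an assumption of this sketch, not a recommendation from the text.

```python
from math import sqrt


def exact_agreement(x, y):
    """Fraction of items on which the two raters give identical scores."""
    return sum(a == b for a, b in zip(x, y)) / len(x)


def pearson_correlation(x, y):
    """Pearson correlation between two equally long lists of scores."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x)
    spread_y = sum((b - mean_y) ** 2 for b in y)
    return covariance / sqrt(spread_x * spread_y)


# Hypothetical case: rater B always scores one point higher than rater A.
rater_a = [1, 2, 3, 4, 1, 2, 3, 4]
rater_b = [2, 3, 4, 5, 2, 3, 4, 5]

print(exact_agreement(rater_a, rater_b))      # 0.0
print(pearson_correlation(rater_a, rater_b))  # 1.0
```

The two raters never give the same score, so a nominal agreement statistic reports no agreement at all, while the correlation shows that they rank the items identically. Which answer is the useful one depends on whether the scale is nominal or ordered.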
